Distributed Processing

22/Aug 2008

This year our company introduced a nice tradition: the lucky folks who went to GDC summarized the talks they had attended for anyone interested. One of my workmates presented Bungie’s talk, ‘Life on the Bungie Farm: Fun Things to Do with 180 Servers’. It’s an interesting talk about Bungie’s distributed build system, which handles code builds, lightmap calculations, resource packaging and some other tasks. It’s not a new concept, of course; one popular commercial example of a similar environment is XGE. Still, it intrigued me enough to experiment with the idea a little.

Obviously, I don’t have 180 servers at home. Actually, there are just two machines: my laptop and a trusty, ~4-year-old desktop. That should be enough for our simple experiments anyway.

Let’s start with a rough outline of the system:

- There’s one server application and N clients. For now there are only ‘worker’ clients (i.e. machines that perform work), and all tasks are triggered directly from the server. It would be handier to let clients connect to the server just to launch certain tasks, but this should be trivial to add.

- The server deals only with distributing tasks between clients. Roughly speaking, a task is described by an executable and a list of files it should process. Examples of tasks are converting textures from .TGA to .DDS or calculating lightmaps for all the levels.

- Tasks are defined in an XML file which resides on the server only. It would be a little more efficient to store it on the client machines as well, but I wanted to avoid that. This way there’s only one copy of the configuration file; if it changes you don’t have to update the other computers, it only has to be reloaded on the server (this can even be done without exiting the application). All the necessary information is sent over the net. For details on the XML format see the attached test.xml file and/or Task.cs. Basically, a task is defined by an executable and a list of entries. Entries can be divided into groups if different groups require different command-line arguments (see test.xml again).

- All the communication is done using sockets. It’s not as reliable as Bungie’s solution (where tasks are sent via an SQL database), but it worked OK for my purposes.
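To make the ‘executable plus grouped entries’ idea a bit more concrete, here is a minimal sketch in Python. It is not the code from Task.cs and not the test.xml schema: the class names, the `convert_textures.exe` tool, its `--format=dxt1` flag and the JSON wire format are all made up for illustration. The only things taken from the post are that a task has an executable, that entries can be grouped when groups need different command-line arguments, and that the server ships the whole description to clients over the net.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List


@dataclass
class EntryGroup:
    """Entries that share the same command-line arguments (hypothetical layout)."""
    pattern: str          # which files belong to the group, e.g. "lightmaps/*.jpg"
    arguments: str = ""   # extra arguments used only for this group


@dataclass
class Task:
    """One executable plus the groups of entries it should process."""
    name: str
    executable: str
    groups: List[EntryGroup] = field(default_factory=list)

    def command_line(self, group: EntryGroup, entry: str) -> List[str]:
        # Argument vector a worker would run for a single entry of this group.
        return [self.executable, *group.arguments.split(), entry]

    def to_wire(self) -> str:
        # Assumed wire format: the server serializes the whole task, so the
        # clients never need their own copy of the configuration file.
        return json.dumps(asdict(self))


# Hypothetical tool name and flag, used only to show the shape of the data.
task = Task(
    name="textures",
    executable="convert_textures.exe",
    groups=[
        EntryGroup(pattern="lightmaps/*.jpg", arguments="--format=dxt1"),
        EntryGroup(pattern="*.tga"),
    ],
)

lightmap_cmd = task.command_line(task.groups[0], "lightmaps/level1.jpg")
received = json.loads(task.to_wire())  # what a client would decode
print(lightmap_cmd)
print(received["executable"], len(received["groups"]))
```

Keeping the per-group arguments inside the task description is what lets the configuration live on the server only: a client can rebuild any command line from the decoded message alone.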

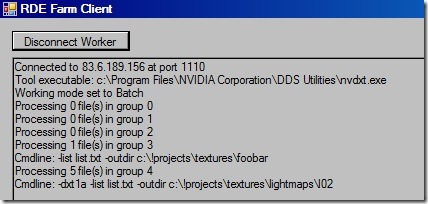

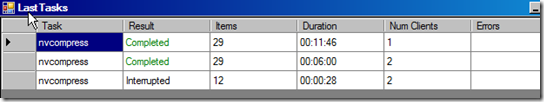

- Tasks can be batched or processed one by one. In most cases it’s safe to go with the latter. There are some tools, however (like NVDXT), that can work with a file containing the list of all entries to process. In that case each client builds its own list, which is later passed to the tool. Batched tasks are more prone to problems when a client disconnects before finishing (there’s no mechanism for dealing with this right now); the whole batch won’t be processed in that case. One-by-one processing may also result in better load balancing, especially if certain entries take much more time to process than others. When a client is done, it asks for more work, so the system balances itself automatically.

- There’s no ‘environment virtualization’ as in XGE (I suspect it may be a fancy name for copying files over the net to the same paths as on the source machine, but I’m not sure), so source files usually have to be obtained some other way. In my tests I used a pre-build step to grab textures from SVN, then committed the DDS files in a post-build step. Worked like a charm. There probably should be support for interrupting a task when the pre-build step fails; I’ll leave that as an exercise for the reader.
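The self-balancing effect of one-by-one processing is easy to see in a toy simulation. The Python sketch below is not taken from the actual server; the worker names, the speeds and the ‘cost’ units are invented. It compares workers that pull one entry whenever they become idle with a naive split that deals entries out evenly up front.

```python
import heapq
from collections import deque
from typing import Dict, List, Tuple


def simulate_pull(costs: List[float], speeds: Dict[str, float]) -> Tuple[float, Dict[str, List[int]]]:
    """Workers request one entry at a time, as soon as they become idle.

    costs  -- abstract amount of work per entry (made-up units)
    speeds -- work units each worker processes per second (made up)
    Returns the total elapsed time and the entry indices each worker handled.
    """
    pending = deque(range(len(costs)))
    assigned: Dict[str, List[int]] = {name: [] for name in speeds}
    # Heap of (time the worker becomes idle, worker name); everyone starts idle.
    idle_at = [(0.0, name) for name in sorted(speeds)]
    heapq.heapify(idle_at)
    finish = 0.0
    while pending:
        now, name = heapq.heappop(idle_at)  # the next worker to ask for work
        entry = pending.popleft()
        done = now + costs[entry] / speeds[name]
        assigned[name].append(entry)
        finish = max(finish, done)
        heapq.heappush(idle_at, (done, name))
    return finish, assigned


def static_split(costs: List[float], speeds: Dict[str, float]) -> float:
    """Baseline: deal entries round-robin up front, ignoring worker speed."""
    names = sorted(speeds)
    totals = {name: 0.0 for name in names}
    for index, cost in enumerate(costs):
        name = names[index % len(names)]
        totals[name] += cost / speeds[name]
    return max(totals.values())


costs = [1.0] * 12                         # twelve equally expensive entries
speeds = {"desktop": 1.0, "laptop": 2.0}   # the laptop is twice as fast
pull_time, assigned = simulate_pull(costs, speeds)
static_time = static_split(costs, speeds)
print(pull_time, static_time, {name: len(done) for name, done in assigned.items()})
```

With these made-up numbers the pull model finishes in 4 time units, with the faster machine taking 8 of the 12 entries, while the even split needs 6 because the slower machine becomes the bottleneck. A batched task behaves more like the static split, since each client commits to its whole list at once.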

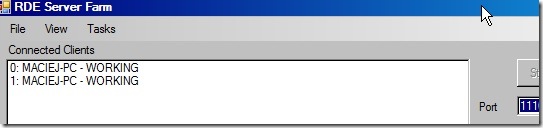

Some (not so) pretty pictures. First, we have a server with two clients connected:

Code + sample XML can be downloaded here. BTW, as I add more code projects it becomes more and more difficult to find them among the ‘normal’ posts. In the near future I’ll try to create a simple page listing all the source snippets published here so far.

Appendix 1

The included test.xml file contains three tasks:

- converting .TGA/.JPG to .DDS via nvcompress,

- converting .TGA/.JPG to .DDS via nvdxt,

- building RDE core library (my personal test).

Conversion tasks will try to convert all *.tga and *.jpg files from c:!projects\textures directory to *.dds. There are 3 entry groups:

- lightmaps. *.jpg files in c:!projects\textures\lightmaps and subdirectories. They’re converted to DXT1. If paths aren’t absolute, they’re relative to the working directory.

- all files (*.*) from the c:!projects\textures\Foo directory. No extension is specified, so *.* is assumed. The output directory is set to c:!projects\textures\foobar (not that I needed it, just to make things more complicated).

- all other .tga/.jpg files from working directory.

Note: group order is important. A file isn’t added to the list if it’s already present there (added by some previous group). That’s why the server tries to sort groups from ‘most specific’ to ‘most general’, so you don’t have to worry about it. File names are most specific, then directories, then extensions.
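The ordering rule can be sketched in a few lines of Python. This only illustrates the idea; it is not the server’s actual code. The group dictionaries, the `kind` field and the numeric ranks are assumptions, and forward slashes are used to keep the path matching trivial.

```python
from typing import Dict, List

# Assumed ranks: lower sorts first (file name, then directory, then extension).
SPECIFICITY = {"file": 0, "directory": 1, "extension": 2}


def matches(group: Dict[str, str], path: str) -> bool:
    """Check whether a path belongs to a group (simplified matching rules)."""
    kind, value = group["kind"], group["value"]
    if kind == "file":
        return path == value
    if kind == "directory":
        return path.startswith(value.rstrip("/") + "/")
    return path.endswith(value)  # kind == "extension"


def assign_files(groups: List[Dict[str, str]], files: List[str]) -> Dict[str, str]:
    """Map every file to the most specific group that matches it."""
    # sorted() is stable, so groups of equal specificity keep their XML order.
    ordered = sorted(groups, key=lambda group: SPECIFICITY[group["kind"]])
    owner: Dict[str, str] = {}
    for group in ordered:
        for path in files:
            # A file already claimed by an earlier group is not added again.
            if path not in owner and matches(group, path):
                owner[path] = group["name"]
    return owner


# Groups deliberately listed from general to specific, i.e. the 'wrong' order.
groups = [
    {"name": "all_jpg", "kind": "extension", "value": ".jpg"},
    {"name": "lightmaps", "kind": "directory", "value": "lightmaps"},
]
files = ["lightmaps/level1.jpg", "wall.jpg", "wall.tga"]
owner = assign_files(groups, files)
print(owner)
```

Even though the generic .jpg group is listed first, the lightmap lands in the directory group because of the sort; the .tga file matches no group and is simply left out.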

PS. New Metallica’s out!

Old comments

SirMike 2008-08-23 12:09:18

Ideas are quite nice but your C# code looks very messy (many antipatterns). There are plenty of places with bad C++ habits ;)

admin 2008-08-23 12:54:48

I can only imagine. I treat C# as C++ with different syntax. Luckily for my workmates I only use it at home :)

ed 2008-08-23 14:00:55

Another feature to add would be to treat N-core workers as N workers with smart caching (for situations where 8 cores calculate 8 lightmaps for the same scene). Another nice feature would be integrating tools with the server for progress display, plus feedback from server to clients for progress and status reports.

admin 2008-08-24 21:19:38

Added simple progress tracing on per-entry level. Without tool support that’s about as far as we can go with details.

js 2008-08-25 10:26:31

Be careful, treating an N-core machine as N machines is wrong.

You might have N cores, but if the machine’s I/O pipelines are saturated that may ruin performance, especially for pre-processors.

ed 2008-08-25 23:10:54

Yes, but if a single machine cannot handle I/O for 2-4 tasks then you don’t need a server cluster but fast disks :) 4-8 core machines are good at tasks like compressing textures, generating lightmaps, optimizing meshes etc. Those tasks usually take much longer than the load/save time.

realtimecollisiondetection.net - the blog » Posts and links you should have read 2008-09-02 15:33:17

[…] Sinilo wrote a really interesting post about Distributed Processing. This is something we’ve been looking into at work a lot, wanting to do parallel/distributed […]
