Rust pathtracer

14/Nov 2014

Last year I briefly described my adventure with writing a pathtracer in the Go language. This year, I decided to give Rust a try. It’s an almost exact 1:1 port of my Go version, so I’ll spare you the details; without further ado, here’s a short list of observations and comparisons. As before, please remember this is written from the position of someone who didn’t know anything about the language 2 weeks ago and is still a complete newbie (feel free to point out my mistakes!):

Rust is much more “different” than most mainstream languages. It was the first time in years that I had to spend a lot of time Googling and scratching my head just to get a program to compile. Go’s learning curve seemed much gentler. One reason is that Rust is still a very young, evolving language. In many cases the information you find is outdated and relates to some older version (and it changes a lot), so there’s lots of conflicting data out there. The other is its unorthodox memory management system, which takes a while to wrap your head around.

As mentioned, it still feels a little immature, with the API and language mechanisms changing all the time. I’m running the Windows version, which probably leaves me lagging even further behind. Suffice it to say, it’s quite easy to get a program that crashes on startup (and I’m quite sure it’s not my Rust code that causes the issues, it’s the generated binary: it crashes before printing even the first message). It’s always the same code, too, which seems to be accessing some spinlock-guarded variable:

```
8B 43 08                 mov  eax,dword ptr [rbx+8]
A8 01                    test al,1
0F 85 B5 47 FD FF        jne  00000000774C2C74
8B C8                    mov  ecx,eax
2B CD                    sub  ecx,ebp
F0 0F B1 4B 08           lock cmpxchg dword ptr [rbx+8],ecx
0F 85 9B 47 FD FF        jne  00000000774C2C69
48 8B 03                 mov  rax,qword ptr [rbx]
4C 89 AC 24 C0 00 00 00  mov  qword ptr [rsp+0C0h],r13
33 ED                    xor  ebp,ebp
45 33 ED                 xor  r13d,r13d
48 83 F8 FF              cmp  rax,0FFFFFFFFFFFFFFFFh
74 03                    je   00000000774EE4E7
FF 40 24                 inc  dword ptr [rax+24h]
```

Memory management, one of Rust’s distinctive features, is both interesting and confusing at first. They seem to go back and forth on an optional GC, but the canonical way is to use one of a few types of smart pointers. Dangling pointers, use-after-free and similar memory errors are detected at compile time. The Rust compiler in general is pretty good at detecting potential problems: once it builds, it’ll probably run fine. The only runtime error I encountered in my app was an out-of-bounds array access. (That’s a good thing, too, as I’ve no idea how to debug my application… I don’t think there’s a decent debugger for Windows.)
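To make the smart-pointer remark a bit more concrete, here is a minimal sketch in current (post-1.0) Rust rather than the 2014 dialect the tracer was written in. The `Material` and `Sphere` types and the `share_material` function are hypothetical. It shows the three most common ways of holding data: `Box<T>` for a single owner, `Rc<T>` for shared ownership with a reference count, and a plain `&T` borrow that the compiler checks.

```rust
use std::rc::Rc;

// Hypothetical scene types, for illustration only (not the tracer's code).
struct Material {
    albedo: f64,
}

struct Sphere {
    radius: f64,
    // Rc<T>: several spheres can share one Material; it is freed when the last handle drops.
    material: Rc<Material>,
}

/// Returns the Rc strong count before and after dropping a boxed sphere.
fn share_material() -> (usize, usize) {
    let diffuse = Rc::new(Material { albedo: 0.8 });

    // Box<T>: a single owner on the heap; freed when the Box goes out of scope.
    let big = Box::new(Sphere { radius: 100.0, material: Rc::clone(&diffuse) });
    let small = Sphere { radius: 1.0, material: Rc::clone(&diffuse) };

    // &T: a plain borrow; the compiler rejects any use of `r` after `big` is dropped.
    let r: &Sphere = &big;
    println!("radii {} and {}, albedo {}", r.radius, small.radius, r.material.albedo);

    let before = Rc::strong_count(&diffuse); // diffuse + big + small = 3
    drop(big); // dropping the Box also drops the Rc handle it held
    let after = Rc::strong_count(&diffuse); // diffuse + small = 2
    (before, after)
}

fn main() {
    let (before, after) = share_material();
    println!("Rc handles: {} -> {}", before, after);
}
```

No explicit `free` appears anywhere: each value is released when its owner goes out of scope, and the borrow checker guarantees no reference outlives it.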

Unlike Go, Rust has operator overloading, but the syntax is a little confusing, to be honest. You don’t use the operator itself when overloading; you have to know which function name it corresponds to. E.g. operator-(Vector, Vector) is:

```rust
impl Sub<Vector, Vector> for Vector {
    fn sub(&self, other: &Vector) -> Vector {
        Vector { x: self.x - other.x, y: self.y - other.y, z: self.z - other.z }
    }
}
```

I’m not sure what the rationale behind this is, but it’s one more thing to remember.
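The `impl Sub<Vector, Vector>` form above is pre-1.0 syntax and no longer compiles. In Rust 1.0 and later, `Sub` takes its operands by value and names the result type through an associated type, `Output`. A minimal sketch of the same operator in current Rust, assuming a `Vector` with three `f64` fields (deriving `Copy` so `a - b` doesn’t consume the operands):

```rust
use std::ops::Sub;

// Assumed layout: three f64 components. Copy makes by-value operands cheap.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Vector {
    x: f64,
    y: f64,
    z: f64,
}

// `a - b` desugars to `Sub::sub(a, b)`; `Output` names the result type.
impl Sub for Vector {
    type Output = Vector;

    fn sub(self, other: Vector) -> Vector {
        Vector {
            x: self.x - other.x,
            y: self.y - other.y,
            z: self.z - other.z,
        }
    }
}

fn main() {
    let a = Vector { x: 3.0, y: 2.0, z: 1.0 };
    let b = Vector { x: 1.0, y: 1.0, z: 1.0 };
    println!("{:?}", a - b); // Vector { x: 2.0, y: 1.0, z: 0.0 }
}
```

The trait-name-to-operator mapping (`Add`, `Sub`, `Mul`, `Neg`, …) still has to be memorized; only the signature shape changed.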

Changing pointer types feels cumbersome at times. For example, let’s imagine we want to change a boxed pointer:

```rust
struct Scene {
    camera: Box<Camera>,
}
```

to a reference. We now have to provide a lifetime specifier, so it changes to:

```rust
struct Scene<'r> {
    camera: &'r mut Camera,
}
```

…and you also have to modify your impl block (2 specifiers):

```rust
impl<'r> Scene<'r> {
    // ...
}
```

It seems a little redundant to me; even in C++ it’d be a matter of changing one typedef.
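For comparison, a minimal sketch of both variants side by side in current Rust. `Camera`, its `fov` field, the `zoom` methods and `zoom_both` are hypothetical. The borrowing `Scene<'r>` needs the lifetime declared on the struct and again on the impl, while the owning version needs neither. (Current compilers also accept `impl Scene<'_>` when the lifetime isn’t referred to by name.)

```rust
// Hypothetical camera type for illustration.
struct Camera {
    fov: f64,
}

// Owning version: no lifetime needed, the scene owns its camera.
struct OwnedScene {
    camera: Box<Camera>,
}

// Borrowing version: 'r ties the scene to the camera it borrows.
struct Scene<'r> {
    camera: &'r mut Camera,
}

impl OwnedScene {
    fn zoom(&mut self, factor: f64) {
        self.camera.fov /= factor;
    }
}

// The impl must declare 'r before it can name Scene<'r>.
impl<'r> Scene<'r> {
    fn zoom(&mut self, factor: f64) {
        self.camera.fov /= factor;
    }
}

fn zoom_both() -> (f64, f64) {
    let mut owned = OwnedScene { camera: Box::new(Camera { fov: 90.0 }) };
    owned.zoom(2.0);

    let mut cam = Camera { fov: 90.0 };
    {
        let mut scene = Scene { camera: &mut cam };
        scene.zoom(2.0);
    } // the mutable borrow of `cam` ends with `scene`
    (owned.camera.fov, cam.fov)
}

fn main() {
    let (a, b) = zoom_both();
    println!("{} {}", a, b); // 45 45
}
```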

[EDIT, forgot about this one initially] Comparing references will actually compare the referenced objects by value. If you want to compare the actual memory addresses, you need to do this:

```rust
if object as *const Sphere == light as *const Sphere // compares pointers
if object == light                                   // compares objects
```

The Rust code is almost exactly the same length as the Go version: around 700 lines.
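Current Rust also provides `std::ptr::eq`, which performs the same address comparison as the `as *const Sphere` casts without spelling out the type. A small sketch with a hypothetical `Sphere` that derives `PartialEq`; `same_value` and `same_object` are illustrative helpers:

```rust
use std::ptr;

// Hypothetical sphere; deriving PartialEq is what makes `a == b` compare fields.
#[derive(PartialEq)]
struct Sphere {
    radius: f64,
}

// `==` on references compares the pointed-to values via PartialEq.
fn same_value(a: &Sphere, b: &Sphere) -> bool {
    a == b
}

// ptr::eq compares addresses, like casting both sides to *const Sphere.
fn same_object(a: &Sphere, b: &Sphere) -> bool {
    ptr::eq(a, b)
}

fn main() {
    let object = Sphere { radius: 1.0 };
    let light = Sphere { radius: object.radius }; // equal value, different object
    println!("{}", same_value(&object, &light)); // true
    println!("{}", same_object(&object, &light)); // false
    println!("{}", same_object(&object, &object)); // true
}
```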

Running the tracer in multiple threads took more effort than with Go. By default, Rust’s tasks spawn native threads (I was a little surprised when I opened my app in Process Hacker and noticed 100+ threads running); you need to explicitly request “green” tasks. It also involved way more memory hacks. By default, Rust doesn’t allow sharing mutable data (for safety reasons); the recommended way is to use channels for communication. I didn’t feel like copying parts of the framebuffer around was a good idea, so I had to resort to some “unsafe” hacks (I still clone immutable data). I quite like this approach: it’s now obvious what data can be modified by background threads. I’m not convinced I chose the most effective way, though, and there’s no thread profiler yet (AFAIK).
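For what it’s worth, current Rust (1.63 and later) can share a framebuffer between threads without `unsafe` and without copying, using `std::thread::scope` together with `chunks_mut`. This is a swapped-in technique, not what the tracer does (that code predates the API). A minimal sketch in which `shade` is a hypothetical stand-in for the per-pixel path tracing work and `render` splits the image into one band of rows per thread:

```rust
use std::thread;

// Hypothetical per-pixel work standing in for the real path tracing.
fn shade(x: usize, y: usize) -> f32 {
    ((x + y) % 256) as f32 / 255.0
}

/// Renders a width x height framebuffer with `threads` scoped threads
/// (threads >= 1, width >= 1), each writing its own band of rows in place.
fn render(width: usize, height: usize, threads: usize) -> Vec<f32> {
    let mut framebuffer = vec![0.0f32; width * height];
    // Rows per band, rounded up so every row is covered.
    let rows_per_band = (height + threads - 1) / threads;

    thread::scope(|s| {
        // chunks_mut yields non-overlapping &mut slices, so the borrow
        // checker can prove no two threads write the same pixels.
        for (band, chunk) in framebuffer.chunks_mut(rows_per_band * width).enumerate() {
            s.spawn(move || {
                let first_row = band * rows_per_band;
                for (i, pixel) in chunk.iter_mut().enumerate() {
                    let y = first_row + i / width;
                    let x = i % width;
                    *pixel = shade(x, y);
                }
            });
        }
    }); // every spawned thread is joined before scope() returns

    framebuffer
}

fn main() {
    let image = render(128, 128, 4);
    println!("{} pixels rendered", image.len());
}
```

Equal bands are the simplest split, but rows can differ a lot in cost, so some threads may sit idle while others finish; finer-grained work units are the usual remedy.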

Performance. Surprisingly, in my tests Rust was quite a bit slower than Go. Even disregarding the task pool/data sharing code, when running from a single thread it takes 1m25s to render a 128x128 image using Go and almost 3 minutes with Rust. With multiple threads the difference is smaller, but still noticeable (43s vs 70s). [EDIT] Embarrassingly enough, it turned out I was testing a build with no optimizations. After compiling with -O, Rust now renders the same picture in 34s instead of 70s (and 1m09s with 1 thread). I’ll leave the old figures, just to show that the debug build still runs at a decent speed.

Random stuff I liked:

- Data is immutable by default, which makes it immediately obvious when it’s modified. E.g. `let r = trace(&mut ray, &context.scene, &context.samples, u1, u2, &mut rng);` Can you tell what’s modified inside the trace function?

- As mentioned, the compiler is very diligent; once the app builds, there’s a good chance it’ll run fine. Error messages are clear and descriptive.

- Pattern matching.
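A minimal sketch of the first and last points, using hypothetical, heavily simplified `Ray`, `Hit` and `trace` (not the tracer’s real signature): `&mut` at the call site flags exactly what the callee may modify, and `match` destructures enum variants, with the compiler checking that every variant is handled.

```rust
// Hypothetical, simplified stand-ins for the tracer's types.
struct Ray {
    bounces: u32,
}

// What a ray can run into along its path.
enum Hit {
    Light(f64),              // emitted radiance
    Surface { albedo: f64 }, // fraction of light reflected
    Miss,
}

// `ray` is `&mut`, so trace may change it; `path` is a shared borrow and can't be changed.
fn trace(ray: &mut Ray, path: &[Hit]) -> f64 {
    let mut throughput = 1.0;
    for hit in path {
        ray.bounces += 1;
        // Each arm destructures one variant; leaving one out is a compile error.
        match *hit {
            Hit::Light(emission) => return throughput * emission,
            Hit::Surface { albedo } => throughput *= albedo,
            Hit::Miss => return 0.0,
        }
    }
    0.0 // path ended without reaching a light
}

fn main() {
    let path = [Hit::Surface { albedo: 0.5 }, Hit::Light(4.0)];
    let mut ray = Ray { bounces: 0 }; // must be `mut` to be lent out as `&mut`
    let r = trace(&mut ray, &path);
    println!("radiance {} after {} bounces", r, ray.bounces); // radiance 2 after 2 bounces
    println!("missed: {}", trace(&mut ray, &[Hit::Miss])); // missed: 0
}
```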

I realize it might seem like I’m mostly praising Go and bashing Rust a little here, but that’s not the case. I’ll admit I couldn’t help but think it seemed immature compared to Go, but I realized that’s an unfair comparison. Go has been around for 5 years and I only started using it 12 months ago, so they had lots of time to iron out most wrinkles. Rust is a very ambitious project and I definitely hope it gains more popularity, but for the time being Go’s minimalism resonates with me better. I mostly code in C++ at work, a language that offers you 100 ways of shooting yourself in the foot. Working with Go, where there’s usually only one true way (and it involves blunt tools), is very refreshing. Rust sits somewhere in between for the time being; it’ll be fascinating to see where it ends up.

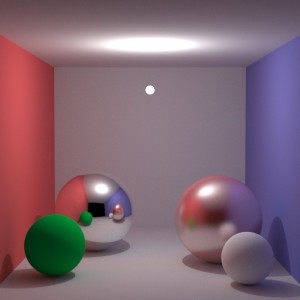

Obligatory screenshot:

Source code can be downloaded here.

Old comments

ph0nk 2014-11-14 11:50:36

Do you have a C++ version of it, and how does its performance compare to the Go and Rust implementations? Just for information :)

Arthur 2014-11-14 14:00:13

I didn’t modify your code, just adjusted it to compile with the Rust nightly.

Rendering with 16x16 samples per pixel:

| Image size | Rust (rustc -O) | Go (go build) |
|---|---|---|
| 128x128 | 10s | 37s |
| 256x256 | 40s | 2m39s |

Rust source: http://paste2.org/FCvdbKpC

Go source: http://paste2.org/cmHJfWYw

i7-2670QM CPU @ 2.20GHz – Linux Mint X64

also on HN https://news.ycombinator.com/item?id=8606261

J 2014-11-14 20:47:38

Addressing specifically your points about data parallelism and threads:

* Unlike in Go, green threads in Rust aren’t recommended for most code (and are usually going to be slower) and have been removed from the standard library.

* Channels aren’t as universally idiomatic as in Go (I actually wouldn’t say they’re particularly encouraged over other synchronization mechanisms) since Rust enforces thread safety in its type system. This includes language support for data parallelism like what you’re doing, although this isn’t exposed by the standard library yet. In particular, safe data parallelism over a vector is something that it is definitely going to support (without unsafe in the interface).

J 2014-11-14 20:49:01

Er, sorry, green threads haven’t been removed yet, but it’s planned.

admin 2014-11-15 01:50:54

@ph0nk - no, not really. I get enough C++ at work, so I try to avoid it at home :)

@Arthur - thx for benchmarking. I’m on 0.13.0 nightly (Oct 10), so fairly recent, but that’s a big difference.

@J - I’ll actually miss green threads. In this case, are we expected to spawn just 4 big tasks (which might balance terribly) or write a scheduler ourselves? Good to hear about planned data parallelism support.

Arthur 2014-11-16 13:56:06

Maybe you forgot to turn on optimizations (-O).

admin 2014-11-16 21:52:29

Doh, that’s embarrassing, you’re completely right. Updated results.